What is a cloud GPU?

A cloud GPU is a graphics processing unit hosted in a provider’s data centre and accessed over the internet. It offers fast, scalable computing for AI, machine learning, rendering, and other high-performance workloads, removes the need for on-site hardware, and lets you use as much or as little performance as each task requires.

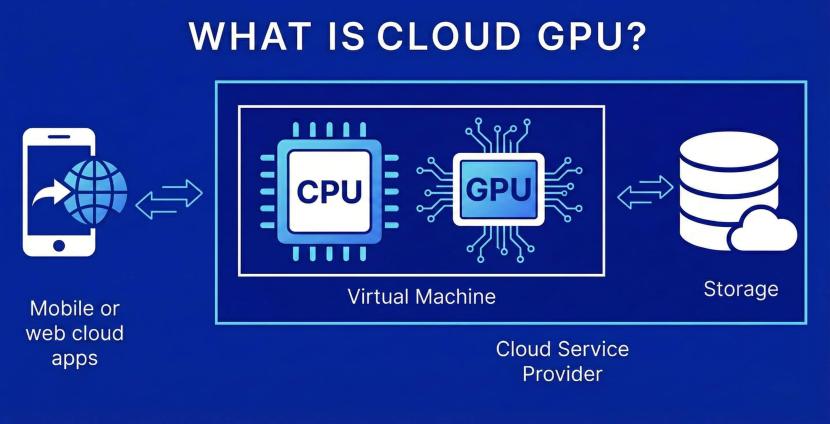

How does a cloud GPU work?

A cloud GPU is essentially a remote graphics processor that you use over the internet to boost performance for demanding workloads. Instead of buying and running the hardware yourself, you rent access to powerful GPUs from a provider on demand. It’s an affordable way to give heavier workloads, such as AI models, machine learning, or 3D work, serious computing power without investing in on-prem equipment.

Behind the scenes, cloud GPUs rely on virtualisation. Depending on the offer, the provider either dedicates an entire physical GPU to your virtual machine (often called GPU passthrough) or splits a physical card into multiple secure, isolated slices, each exposed as its own “instance” with its own memory and share of processing power. Either way, you can choose the size that suits your workload, whether you’re testing on a small scale or running more demanding training jobs, and scale as needed.
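To make the slicing idea concrete, here is a minimal sketch of the capacity bookkeeping a provider might do when carving one card into isolated slices. The `PhysicalGPU` class, its `carve` method, and the sizes used are hypothetical; real isolation is enforced by the hypervisor and the GPU hardware, not by code like this.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalGPU:
    """Hypothetical model of one physical card that can be split into slices."""
    name: str
    memory_gb: int
    compute_units: int
    slices: list = field(default_factory=list)

    def free_memory(self) -> int:
        # Memory not yet reserved by any slice.
        return self.memory_gb - sum(s["memory_gb"] for s in self.slices)

    def free_compute(self) -> int:
        # Compute units not yet reserved by any slice.
        return self.compute_units - sum(s["compute_units"] for s in self.slices)

    def carve(self, tenant: str, memory_gb: int, compute_units: int) -> dict:
        """Reserve a dedicated slice, refusing any request that would oversubscribe the card."""
        if memory_gb <= 0 or compute_units <= 0:
            raise ValueError("a slice needs some memory and some compute")
        if memory_gb > self.free_memory() or compute_units > self.free_compute():
            raise ValueError(f"{self.name}: not enough free capacity for {tenant}")
        new_slice = {"tenant": tenant, "memory_gb": memory_gb, "compute_units": compute_units}
        self.slices.append(new_slice)
        return new_slice


gpu = PhysicalGPU("gpu0", memory_gb=80, compute_units=7)  # hypothetical card
gpu.carve("team-a", memory_gb=40, compute_units=3)  # larger slice for training
gpu.carve("team-b", memory_gb=20, compute_units=2)  # smaller slice for testing
print(gpu.free_memory(), gpu.free_compute())  # prints: 20 2
```

Because each slice’s memory and compute are reserved up front, one tenant cannot eat into another’s share; a request that does not fit is rejected rather than squeezed in.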

Using a cloud GPU is straightforward. You can access it through APIs, dashboards, or automation tools, which makes it easier to integrate into existing pipelines. You can also manage all your tasks remotely, from AI models to 3D rendering and high-performance simulations. This flexibility is particularly useful when experimenting with different applications or migrating from older environments.

Cloud GPUs are also easy to connect with other cloud computing services. You can link them with online storage, networking tools, or even multi-cloud platforms to build flexible AI infrastructure that scales as your needs shift. Many teams prefer this option because it reduces the cost and complexity of buying, maintaining, and upgrading physical hardware. Unlike on-prem GPUs, cloud GPUs also spare you the hassle of managing floor space, electricity, and cooling.

Understanding the key differences: CPU vs GPU architecture

CPUs and GPUs both process data, but they are built for very different approaches to computing. A CPU is designed to handle a wide range of general-purpose applications and excels at tasks that depend on sequential logic and complex branching. A GPU, on the other hand, shines when you need massive parallel processing power, making it ideal for graphics, simulations, machine learning, and AI models that rely on thousands of small calculations running simultaneously.

Here’s a simple table to break down the architectural differences:

| Feature | CPU | GPU |
|---|---|---|
| Core design | Relatively few powerful cores optimised for sequential tasks | Hundreds or thousands of smaller cores optimised for parallel processing |
| Best suited for | General-purpose applications, system management, and logic-heavy tasks | AI, machine learning, rendering, simulations, and high-performance workloads |
| Memory handling | Large caches for low-latency access, suited to fast decision-making | High-bandwidth memory for streaming large datasets and models |
| Processing | Executes a small number of complex tasks at a time, each very quickly | Executes thousands of lightweight tasks simultaneously |
| Flexibility | Highly adaptable for varied computing tasks | Highly efficient for repetitive, parallel tasks |
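The table explains why GPUs help with parallel work, but most real jobs also contain steps that can’t be parallelised. Amdahl’s law, a standard rule that is not taken from this article, estimates the best-case speedup when a fraction `p` of the runtime is spread across `N` parallel units. The sketch below assumes the parallel part scales perfectly and ignores memory and data-transfer overheads, so treat its numbers as upper bounds.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Best-case speedup from Amdahl's law: 1 / ((1 - p) + p / N).

    parallel_fraction (p): share of the original runtime that can run in parallel.
    workers (N): number of parallel execution units, e.g. GPU cores.
    Assumes the parallel part scales perfectly and ignores memory and
    data-transfer overheads, so real speedups will be lower.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_part = 1.0 - parallel_fraction
    return 1.0 / (serial_part + parallel_fraction / workers)


for p in (0.50, 0.95, 0.999):
    few = amdahl_speedup(p, 8)       # a handful of CPU-style cores
    many = amdahl_speedup(p, 5000)   # thousands of GPU-style cores
    print(f"p={p}: 8 workers -> {few:.1f}x, 5000 workers -> {many:.1f}x")
```

Even with thousands of cores, a job that is 95% parallel can never exceed a 20x speedup, because the remaining 5% still runs sequentially. Workloads dominated by parallel operations, such as the matrix maths in model training, are the ones that benefit most from GPUs.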

Cloud GPU vs physical GPU

Cloud GPU

- Offers on-demand performance with scalable GPUs for changing workloads.

- No upfront hardware investment or ongoing maintenance cost.

- Easy to expand processing power during AI training, rendering, or large applications.

- Integrates seamlessly with cloud computing environments, automation tools, and multi-cloud platforms.

- A strong alternative to on-prem GPUs when you need more flexibility, faster setup, and less operational overhead.

Physical GPU

- Full ownership of the hardware, but fixed resources and limited scalability.

- Ongoing costs for electricity, cooling, and component upgrades on top of the upfront purchase.

- Requires manual management of security, patches, and infrastructure reliability.

- Better suited for very specific or constant workload requirements where usage doesn’t fluctuate.

- Less adaptable than cloud-based instances, especially for teams running machine learning pipelines or multiple models.

Advantages of a Cloud GPU

High performance for compute-intensive workloads

Cloud GPUs offer strong, reliable performance for demanding applications such as AI models, machine learning, rendering, and simulations. By tapping into powerful GPUs hosted by a cloud provider, you get the processing power you need without upgrading local hardware.

Scalability on demand

As your workloads grow, from testing new models to handling bursts in data processing, you can scale GPU resources up or down in minutes. This level of flexibility simply isn’t possible with fixed on-prem equipment.
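As a rough illustration of on-demand scaling, the sketch below works out how many identical GPU instances a burst of queued jobs needs in order to finish before a deadline. The function name, the one-job-per-instance assumption, and the `max_instances` cap (standing in for an account quota) are all hypothetical.

```python
import math


def instances_needed(queued_jobs: int, minutes_per_job: float,
                     deadline_minutes: float, max_instances: int) -> int:
    """Estimate how many GPU instances to start for a burst of independent jobs.

    Assumes each instance runs one job at a time, every job takes the same time,
    and a job cannot be split across instances.
    """
    if queued_jobs <= 0:
        return 0
    # How many whole jobs one instance can finish before the deadline.
    jobs_per_instance = math.floor(deadline_minutes / minutes_per_job)
    if jobs_per_instance == 0:
        raise ValueError("a single job takes longer than the deadline")
    needed = math.ceil(queued_jobs / jobs_per_instance)
    # If the cap applies, the deadline will be missed; the caller should check.
    return min(needed, max_instances)


print(instances_needed(120, minutes_per_job=15, deadline_minutes=60, max_instances=50))  # prints 30
```

Once the burst clears, the same calculation returns a smaller number (or zero), which is the point at which instances can be released so you stop paying for them.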

Cost-efficiency and reduced hardware investment

Cloud GPUs remove the upfront cost of physical cards and the ongoing costs of cooling, power, and maintenance. You only pay for what you use, which helps you budget more efficiently and makes it easier to compare prices or plan a migration from older infrastructure.

Faster deployment and reduced maintenance

Since the cloud provider manages hardware installation, maintenance, and the security of the underlying infrastructure, deployment is quick and straightforward. You can launch instances for testing, AI training, or heavier workloads without any physical setup, letting teams focus on building applications rather than managing hardware.

Popular use cases for cloud GPUs

AI and machine learning workloads

Cloud GPUs are ideal for training AI models and running ML experiments that need strong performance and fast processing. They let you handle large datasets, test different architectures, and scale your resources as your workloads grow, all without buying specialised hardware.

3D rendering and graphics-intensive applications

Artists, designers, and developers use cloud GPUs to speed up rendering and visual effects. With powerful GPUs available on demand, you can reduce waiting times, run multiple projects at once, and work smoothly across various platforms and environments.

High-performance computing (HPC)

For scientific research, simulations, and other compute-heavy tasks, cloud GPUs provide the power and scalability needed to process complex calculations quickly. They are a practical option for teams who want reliable performance without managing their own HPC infrastructure.

Big data analytics and simulations

Cloud GPUs excel at handling huge volumes of data, making them useful for analytics, forecasting, and large-scale simulations. By pairing GPU processing with other cloud computing services, organisations can explore insights faster and adapt capacity to match peak usage.

Security and compliance considerations

Cloud GPUs use strong isolation to keep your data, models, and workloads separate from other tenants. Virtualisation isolates the processing of each instance, while the cloud provider manages patches and protective measures across the underlying infrastructure; securing the operating system and software on your own instance generally remains your responsibility.

Most major providers are certified against recognised standards such as ISO/IEC 27001 and offer encryption, controlled access, and secure networking across different environments. Checking which certifications apply to your region and sector helps you maintain a reliable, compliant service while scaling GPUs, migrating workloads, or integrating with wider cloud computing tools.

How to choose your Cloud GPU

Performance requirements (memory, cores, processing power)

Start by matching the GPU’s memory, core count, and processing capacity to your workloads. AI models, rendering, and machine learning often need higher performance and more specialised hardware, while lighter applications may run comfortably on smaller instances.
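A common first step is estimating how much GPU memory a model needs. The sketch below uses widely quoted rules of thumb: model weights take about 2 bytes per parameter in fp16/bf16, while mixed-precision training with the Adam optimiser needs roughly 16 bytes per parameter before activations. The 20% `overhead` factor and the list of GPU memory sizes are assumptions for illustration, not any provider’s catalogue.

```python
# Approximate bytes per parameter for the model weights alone.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

# Rule of thumb for mixed-precision training with Adam: fp16 weights (2)
# + fp16 gradients (2) + fp32 master weights (4) + two fp32 Adam moments (4 + 4).
# Activations come on top of this and depend on batch size and sequence length.
TRAINING_BYTES_PER_PARAM = 16


def estimate_gpu_memory_gb(num_params: float, mode: str = "inference",
                           dtype: str = "fp16", overhead: float = 1.2) -> float:
    """Rough GPU memory estimate in GB (10^9 bytes).

    `dtype` is only used for inference; `overhead` is an assumed safety margin
    for runtime buffers, caches, and framework memory.
    """
    if mode == "inference":
        per_param = BYTES_PER_PARAM[dtype]
    elif mode == "training":
        per_param = TRAINING_BYTES_PER_PARAM
    else:
        raise ValueError("mode must be 'inference' or 'training'")
    return num_params * per_param * overhead / 1e9


def smallest_fitting_gpu(required_gb: float, gpu_sizes_gb: list):
    """Return the smallest GPU memory size that fits, or None if none does."""
    for size in sorted(gpu_sizes_gb):
        if size >= required_gb:
            return size
    return None


hypothetical_sizes_gb = [16, 24, 48, 80]  # illustrative sizes only
need = estimate_gpu_memory_gb(7e9, mode="inference", dtype="fp16")
print(f"{need:.1f} GB -> {smallest_fitting_gpu(need, hypothetical_sizes_gb)} GB GPU")
```

Under these assumptions a 7-billion-parameter model needs about 16.8 GB for fp16 inference (a 24 GB card in this list), but about 134 GB for training, which no single card here can hold and which points towards a multi-GPU setup.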

Scalability and flexibility for changing workloads

If your usage varies or you expect rapid growth, choose a setup that scales easily. Cloud GPUs make it simple to adjust resources on demand, offering the flexibility needed for evolving workloads, testing, or multi-stage training cycles.

Cost assessment and pricing models

Compare pricing based on your actual usage patterns. Some teams prefer hourly or monthly billing, while others benefit from reserved options for predictable workload levels. Keep an eye on overall costs, including storage and network traffic, when planning upgrades or migration.
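A quick way to compare billing models is to find the break-even point: the number of GPU-hours per month at which a flat monthly price becomes cheaper than paying by the hour. The prices below are placeholders, not real rates, and the class and function names are hypothetical. Storage and network charges are modelled as identical under both plans, so they raise the total without changing which plan wins.

```python
from dataclasses import dataclass


@dataclass
class PricingAssumptions:
    hourly_rate: float                # price per GPU-hour, pay-as-you-go (placeholder)
    monthly_price: float              # flat price per GPU per month (placeholder)
    storage_and_traffic: float = 0.0  # monthly storage + network charges, same for both plans


def monthly_totals(hours_used: float, p: PricingAssumptions) -> dict:
    """Total monthly bill under each billing model for a given number of GPU-hours."""
    return {
        "hourly": hours_used * p.hourly_rate + p.storage_and_traffic,
        "monthly": p.monthly_price + p.storage_and_traffic,
    }


def break_even_hours(p: PricingAssumptions) -> float:
    """GPU-hours per month above which the flat monthly price is cheaper."""
    return p.monthly_price / p.hourly_rate


prices = PricingAssumptions(hourly_rate=2.0, monthly_price=1000.0, storage_and_traffic=50.0)
print(break_even_hours(prices))     # prints 500.0
print(monthly_totals(200, prices))  # part-time use: hourly billing is cheaper
print(monthly_totals(730, prices))  # roughly 24/7 use: the monthly plan is cheaper
```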

Integration with existing cloud infrastructure

Ensure the Cloud GPU fits smoothly into your current infrastructure, tools, and deployment pipelines. You can pair GPU instances with storage, orchestration frameworks, and other cloud computing services to streamline operations.

Networking and data transfer speeds

Fast networking helps when moving large datasets or running distributed models. Look for high-bandwidth options, low-latency links, and smooth integration across platforms and environments, especially for AI infrastructure or multi-node training.
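To judge whether network speed will be a bottleneck, it helps to estimate how long a dataset takes to move. The sketch converts size and link speed into seconds; storage sizes are usually quoted in bytes and link speeds in bits per second, hence the factor of 8. The function name is hypothetical, and the 80% `efficiency` figure is an assumption standing in for protocol overhead and contention, not a measured value.

```python
def transfer_time_seconds(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimated time to move `size_gb` gigabytes over a `link_gbps` gigabit/s link.

    Uses decimal units (1 GB = 10^9 bytes, 1 Gbit/s = 10^9 bits/s) and assumes the
    link sustains `efficiency` of its nominal speed for the whole transfer.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    gigabits = size_gb * 8  # gigabytes -> gigabits
    return gigabits / (link_gbps * efficiency)


for gbps in (1, 10, 25):
    minutes = transfer_time_seconds(2000, gbps) / 60  # a 2 TB dataset
    print(f"{gbps:>2} Gbit/s: about {minutes:.0f} minutes")
```

Under these assumptions a 2 TB dataset takes roughly five and a half hours at 1 Gbit/s but about half an hour at 10 Gbit/s, which is why link speed matters for distributed training and large data migrations.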

Support, reliability, and SLA

Reliable support and clear SLAs help ensure stability throughout your service lifecycle. This is important when running critical applications, managing multiple pipelines, or relying on a cloud provider for long-term operations.

Implementing cloud GPUs with OVHcloud

Available GPU instances and configurations

OVHcloud provides GPU instances suited to everything from quick experiments to large AI training and high-performance workloads. You can pick configurations based on memory, processing needs, or the specific requirements of your applications, whether for machine learning, rendering, or data-heavy models.

API integration, automation, and orchestration tools

Deployment and scaling are straightforward thanks to API access and automation tools. These allow you to manage resources consistently across different environments, making migration and orchestration smoother when shifting workloads to cloud GPUs.

Best practices for maximising performance and cost efficiency

For the best balance of performance and cost, match GPU types to your workload, monitor usage, and avoid over-provisioning. Pairing Cloud GPUs with storage, networking, and other cloud computing services helps maintain efficiency as you scale AI infrastructure or run multiple models.
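Monitoring only pays off if it leads to action. The sketch below turns utilisation samples into a simple recommendation: release instances that sit idle and consider a smaller configuration when both compute and memory stay low. The function name and thresholds are illustrative assumptions; on NVIDIA GPUs, samples like these could be collected with `nvidia-smi --query-gpu=utilization.gpu,memory.used --format=csv`.

```python
from statistics import mean


def review_gpu_usage(util_percent: list, mem_used_gb: list, mem_total_gb: float,
                     low_util: float = 30.0, low_mem_fraction: float = 0.5) -> str:
    """Classify a GPU instance from utilisation samples taken over a period.

    util_percent: GPU utilisation samples (0-100).
    mem_used_gb:  GPU memory-in-use samples, in GB.
    Thresholds are illustrative defaults, not provider recommendations.
    """
    if not util_percent or not mem_used_gb:
        raise ValueError("need at least one sample of each metric")
    if max(util_percent) == 0:
        return "idle: release the instance"
    avg_util = mean(util_percent)
    # Peak, not average, memory: the model must still fit on a smaller card.
    peak_mem_fraction = max(mem_used_gb) / mem_total_gb
    if avg_util < low_util and peak_mem_fraction < low_mem_fraction:
        return "over-provisioned: consider a smaller instance"
    return "keep"


print(review_gpu_usage([10, 20, 15], [6, 8, 7], mem_total_gb=24))
```

Peak memory is used rather than the average because a model that occasionally needs most of the card’s memory will fail on a smaller one, even if the GPU is lightly loaded most of the time.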

Accessing cloud GPUs with OVHcloud

OVHcloud offers scalable Cloud GPU instances, predictable pricing, and easy integration with wider cloud computing services, making it simple to run AI models, machine learning pipelines, and other high-performance workloads without managing physical hardware.

GPU instances

Discover flexible, on-demand GPU instances designed for AI training, rendering, and compute-heavy applications. Pick from multiple configurations optimised for memory, processing power, and advanced workloads, so you can launch projects quickly and scale effortlessly across environments with the strong performance you need.

GPU dedicated servers

Run AI training and compute-intensive tasks on GPU dedicated servers designed for maximum control and performance. With exclusive access to powerful GPU hardware and full customisation of your environment, you can push large models, manage complex pipelines, and scale confidently from testing to production.

High-performance solutions

Access specialised compute options for simulations, analytics, HPC tasks, and data-intensive workloads. These solutions deliver consistent performance, integrate smoothly with GPU resources and dedicated servers, and provide a reliable foundation for complex, multi-stage projects. You can compare them by use case to find the one that fits your needs best.