What is High Performance Computing (HPC)?

High Performance Computing (HPC) refers to the use of supercomputers and computer clusters to solve complex computational problems at very high speed. It harnesses many processors working in parallel to handle massive datasets, often performing more than a million times faster than conventional desktop or server systems.

These systems can perform quadrillions of calculations per second, far exceeding the capabilities of a typical desktop computer or workstation. They are often used to tackle large problems in science, engineering, and business, such as computational fluid dynamics, data warehousing, transaction processing, and the building and testing of virtual prototypes.

Why is HPC important?

HPC is crucial for several reasons. First, it enables the processing of massive amounts of information and complex calculations at very high speed, which is essential in today's information-driven world. This speed is vital for real-time needs, such as tracking developing storms, testing new products, or analysing stock trends.

HPC also plays a role in advancing scientific research, artificial intelligence, and deep learning. Indeed, these systems have been instrumental in accelerating U.S. scientific and engineering progress, contributing to advancements in climate modelling, advanced manufacturing techniques, and national security.

Industries including healthcare, aviation, and manufacturing rely on HPC to manage complex workloads and process data in near-real time. Broadly speaking, HPC-supported simulations can reduce or even eliminate the need for physical tests, saving time and resources.

How does HPC work?

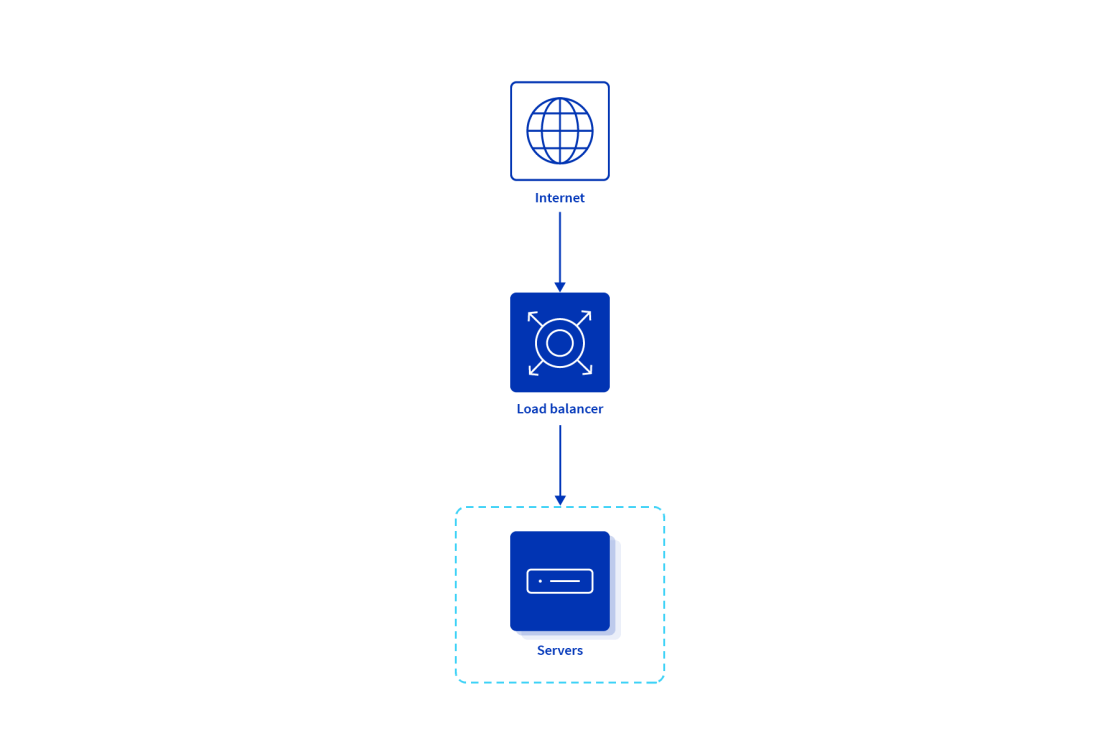

HPC systems are typically composed of hundreds or thousands of compute servers, also known as nodes, networked together into a cluster. The nodes work on different parts of a problem at the same time, an approach known as parallel processing.
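As a rough illustration of parallel processing, the sketch below splits a dataset into chunks, hands each chunk to a separate worker, and combines the partial results. It is a minimal sketch, not how any particular cluster is programmed: threads on one machine stand in for nodes, and the function names are made up for this example. On a real cluster, each chunk would typically be sent to a different node by a scheduler or a message-passing library.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks take one extra element each.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done independently by one worker (one 'node' in this sketch)."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, n_workers=4):
    """Scatter chunks to workers, then combine the partial results."""
    chunks = split_into_chunks(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1_000_000)), n_workers=8))
```

The pattern of scattering the work, computing independently, and gathering the results is the part that carries over to real clusters; the worker mechanism itself would differ.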

To operate at maximum performance, each component of the system, including the storage and networking components, must keep pace with the others. For instance, the storage component must be able to feed and ingest information to and from the compute servers as quickly as it is processed. If one component cannot keep up with the rest, the performance of the infrastructure suffers.
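The bottleneck effect described above can be sketched in a few lines: the sustained rate of the whole system is capped by its slowest component. The throughput figures below are hypothetical values chosen only for illustration, and the function name is an assumption of this example.

```python
def effective_throughput(stage_rates_gb_per_s):
    """Return the sustained rate of a pipeline and the stage that limits it."""
    limiting_stage = min(stage_rates_gb_per_s, key=stage_rates_gb_per_s.get)
    return stage_rates_gb_per_s[limiting_stage], limiting_stage


if __name__ == "__main__":
    # Hypothetical figures, not measurements of any real system.
    rates = {"storage": 5.0, "network": 25.0, "compute": 40.0}
    rate, stage = effective_throughput(rates)
    print(f"Sustained rate: {rate} GB/s, limited by {stage}")
```

In this made-up configuration, faster compute servers would not help until storage could feed them more quickly.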

HPC in cloud computing

HPC in cloud computing integrates the computational power and scalability of traditional HPC with the flexibility and on-demand nature of cloud services.

In a cloud environment, users can access and utilise vast computing resources, including processing power, memory, and storage, to perform complex and resource-intensive tasks. These tasks include simulations, scientific research, data analysis, and other computationally intensive workloads.

HPC workloads require low-latency, high-bandwidth networking for efficient communication between nodes, and cloud providers offer fast interconnect options to support this. Efficient data movement is also crucial, and cloud platforms provide tools and solutions for securely transferring large datasets to and from the cloud.

The benefits of deploying cloud-based high-performance systems include scalability and cost efficiency. Users can scale their computational resources based on their needs, and they pay only for the resources they use, avoiding the need to invest in and maintain expensive on-premises infrastructure.

What is an HPC cluster?

A high performance computing cluster is a collection of interconnected servers, known as nodes, that work together to perform complex computational tasks at phenomenal speeds.

These clusters are designed to handle large-scale processing and are connected via a fast interconnect, allowing them to communicate and handle information efficiently. The nodes within a cluster can be specialised to perform different types of tasks, and they typically include components such as CPU cores, memory, and disk space, similar to those found in personal computers but with greater quantity, quality, and power.

Login nodes

Login nodes act as the gateway for users to access the cluster. They are used for tasks such as preparing submission scripts for batch work, submitting and monitoring jobs, analysing results, and transferring data. These nodes are not intended for running computational jobs or compiling software, which should be done on compute nodes or through an interactive session if necessary.
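To make "preparing submission scripts" more concrete, here is a minimal sketch of a helper that builds the text of a batch script. It assumes the cluster uses the Slurm scheduler, which this article does not specify; other schedulers use different directives. The job name and the `./simulate` command are hypothetical placeholders.

```python
def render_batch_script(job_name, nodes, tasks_per_node, walltime, command):
    """Build the text of a Slurm batch script (Slurm is an assumption here)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        f"#SBATCH --output={job_name}-%j.out",  # %j expands to the job ID
        "",
        f"srun {command}",  # launch the command on the allocated resources
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: 2 nodes, 16 tasks each, one hour of wall time.
    print(render_batch_script("demo", 2, 16, "01:00:00", "./simulate"))
```

On a Slurm cluster, the resulting file would usually be submitted from the login node with `sbatch`, and the scheduler would then run it on compute nodes.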

Compute nodes

Compute nodes are the workhorses of a cluster, performing most of the numerical computations. They may have minimal persistent storage but are equipped with large amounts of DRAM to handle the computational workload. These nodes execute the workload using local resources such as CPUs and, in some cases, GPUs.

Large memory nodes

Large memory nodes are specialised nodes within a cluster that have significantly more memory than regular compute nodes. They are designed for jobs whose memory requirements exceed what is available on standard nodes.

GPU nodes

GPU nodes are equipped with Graphics Processing Units (GPUs) in addition to CPU cores. These nodes are optimised for computations that can be run in parallel on a GPU, which can significantly accelerate certain types of calculations, particularly those that are well-suited to the architecture of GPUs.

Reserved or specialist nodes

Reserved or specialist nodes are nodes within a cluster that are configured for specific tasks or reserved for certain users or groups. These nodes may include accelerators or other specialised hardware to cater to particular computational needs or workloads that require unique resources not found in the standard compute nodes.
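The division of labour between node types can be sketched as a simple routing rule. This is only an illustration: the memory threshold, the `JobRequest` class, and the node labels are assumptions made for this example, and real clusters expose node types through their scheduler's own partitions or queues.

```python
from dataclasses import dataclass

# Hypothetical memory available on a standard compute node.
STANDARD_NODE_MEMORY_GB = 192


@dataclass
class JobRequest:
    memory_gb: float
    needs_gpu: bool = False


def choose_node_type(job, standard_memory_gb=STANDARD_NODE_MEMORY_GB):
    """Pick a node type for a job based on its stated requirements."""
    if job.needs_gpu:
        return "gpu"
    if job.memory_gb > standard_memory_gb:
        return "large-memory"
    return "compute"


if __name__ == "__main__":
    print(choose_node_type(JobRequest(memory_gb=64)))
    print(choose_node_type(JobRequest(memory_gb=1024)))
    print(choose_node_type(JobRequest(memory_gb=64, needs_gpu=True)))
```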

HPC use cases

HPC is not the best fit for every need. One of the main challenges is the cost associated with these systems. While HPC systems can process large amounts of information quickly, they may not be necessary for tasks that do not require such speeds or volumes. However, high performance computing is critical in many environments: without HPC, some tasks simply would not be possible.

Optimal use cases for HPC

HPC is optimal for a variety of needs across different industries and scientific fields. Here are some specific examples where it is particularly well-suited:

- Healthcare and life sciences: HPC is used for processing data in near-real time for diagnoses, clinical trials, or immediate medical interventions. It is also used for studying biomolecules and proteins in human cells to develop new drugs and medical therapies, and it is instrumental in modelling and simulating the human brain.

- Aerospace and manufacturing: Here high-performance systems are ideal for optimising the materials used in manufacturing. Computing-driven research allows companies to create more durable components while using the least material. It is also used for simulating assembly lines and understanding processes to improve efficiency.

- Energy and environment: HPC is used for climate research, including modelling and simulation of climate patterns. It is also used for developing sustainable agriculture and for analysing sustainability factors. HPC is instrumental in driving discovery in nuclear power, nuclear fusion, renewable energy, and space exploration.

- AI and machine learning: HPC can also support other forms of processing, for example running large-scale AI models in fields such as cosmology, astrophysics, and physics, and managing unstructured datasets.

These are just a few examples. Others include financial services, where high-performance computing is used for financial risk analytics and market trend prediction, and media and entertainment, where companies use HPC for rendering, audio, and video processing in media production. Even in government, high-performance computing delivers powerful capabilities, including analysing census and internet of things (IoT) data and supporting large-scale infrastructure projects.

Less ideal use cases for HPC

High performance computing isn’t the ideal solution to every problem. Here are a few instances in which we’d suggest alternative solutions may be better:

Handling sensitive data

While HPC systems can process large amounts of information quickly, they may not be the best choice for handling sensitive information. HPC data storage operating in shared and batch mode poses challenges for sensitive information, such as health, financial, or privacy-protected information.

Although there are methods to securely process protected information on these systems, these methods often require additional measures and can impact the existing operations.

Low-volume workloads

HPC systems are designed to handle large volumes of information and complex calculations. For low-volume workloads, the time and effort required to learn and use clusters may not be justified.

The investment in learning to handle Linux, shell scripting, and other skills for using HPC clusters may be better spent elsewhere if the volume of work is low.

Low volume, low memory serial workloads

HPC is not a magic bullet that will make any workload run faster. Workflows that only run on a single core (serial) and do not need large amounts of memory are likely to run slower on an HPC cluster than on most modern desktop and laptop computers.

If you run a low volume of serial jobs, you will likely find that your own computer completes them more quickly.
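One way to see why extra cores do not help a serial job is Amdahl's law, a standard model that this article does not name but that fits the point above. It estimates the best-case speed-up from the fraction of a program that can run in parallel. The sketch below is illustrative only and ignores real-world overheads such as communication and job queueing.

```python
def amdahl_speedup(parallel_fraction, n_cores):
    """Best-case speed-up for a program under Amdahl's law.

    parallel_fraction: share of the runtime that can be parallelised (0 to 1).
    n_cores: number of cores the parallel part is spread across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_cores < 1:
        raise ValueError("n_cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_cores)


if __name__ == "__main__":
    for fraction in (0.0, 0.5, 0.95):
        print(fraction, amdahl_speedup(fraction, n_cores=1000))
```

A fully serial job (`parallel_fraction` of 0) gets a speed-up of exactly 1 however many cores are available, so any difference in per-core speed between a cluster node and a desktop decides which finishes first.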

Training and educational purposes

University HPC clusters are used to facilitate large computational workloads and are not usually used as a training aid or facility.

While exceptions may be made for high-performance computing-specific training with prior engagement with staff, these systems are not typically for general training or educational purposes.

Non-legitimate or non-research purposes

HPC clusters are provided to facilitate legitimate research workloads. Inappropriate usage of cluster resources, such as mining cryptocurrency, hosting web services, abusing file storage for personal files, accessing files or software to which a user is not entitled, or other non-legitimate usage will likely result in an investigation and action.

Account sharing is also not permitted, and any users or parties found sharing accounts are likely to face an investigation and action.

Innovations in HPC

High-performance computing has seen several significant innovations in recent years. Cloud-based solutions are becoming more prevalent, offering scalable and cost-effective resources for intensive computational tasks. This trend contributes to its democratisation, making these powerful tools more accessible to a wider range of users.

Exascale computing, capable of performing one exaflop (a billion billion calculations per second), is the next frontier in high-performance computing.
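To put that figure in perspective, the short calculation below compares how long a fixed workload would take at one exaflop and on a desktop. The workload size and the desktop rate are hypothetical round numbers picked for illustration, not benchmarks of any real machine.

```python
EXAFLOP = 10**18  # a billion billion operations per second

# Hypothetical figures used only for this comparison.
ASSUMED_DESKTOP_RATE = 10**11  # operations per second
ASSUMED_WORKLOAD = 10**21      # total operations to perform

SECONDS_PER_YEAR = 365 * 24 * 3600


def seconds_to_complete(total_operations, operations_per_second):
    """Time needed to finish a workload at a constant sustained rate."""
    return total_operations / operations_per_second


if __name__ == "__main__":
    exascale = seconds_to_complete(ASSUMED_WORKLOAD, EXAFLOP)
    desktop = seconds_to_complete(ASSUMED_WORKLOAD, ASSUMED_DESKTOP_RATE)
    print(f"Exascale system: {exascale:.0f} seconds")
    print(f"Desktop: {desktop / SECONDS_PER_YEAR:.0f} years")
```

Under these assumptions, the exascale system finishes in 1,000 seconds, while the desktop would need more than 300 years.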

Although still in the early stages of development, quantum computing promises to deliver orders-of-magnitude improvements in performance over traditional computers. Quantum computers could solve problems currently intractable for traditional computers, opening up new possibilities for HPC applications.

HPC across various industries

What does high performance computing look like in practice? In this section, we explore some of the practical implications across industries.

High-demand industries

High-performance computing plays a significant role wherever a lot of computing capacity is required, from health and life sciences to manufacturing, energy, and weather forecasting. It involves the use of powerful processors, servers, and systems to handle larger information sets, perform complex calculations, and execute data-heavy tasks more efficiently.

Manufacturing

In the manufacturing sector, HPC is used to solve problems across a wide range of applications. It helps evolve legacy industries and improve more recent manufacturing sectors like additive manufacturing. High-performance computing has demonstrated its ability to cut manufacturing costs and improve production.

Media and entertainment

In the media and entertainment industry, HPC is used to create and deliver content efficiently. It is crucial for generating high-quality visual effects and computer-generated imagery. HPC solutions must integrate with existing tools and applications and enable efficiency at all stages of media workflows.

Energy

HPC is widely used in the energy industry for tasks such as modelling and simulation of energy reserves, optimising power grid distribution, and improving energy efficiency in manufacturing processes.

Health and life sciences

HPC is transforming the health and life sciences industry by enabling professionals to handle information in near-real time and generate insights that can transform patient outcomes. It supports scientific fields from research to industrial applications in the medical area, enabling researchers to run faster and more complex calculations, simulations, and analyses.

Weather forecasting

HPC is crucial in weather forecasting, where it is used to process vast amounts of meteorological data. This information is used to run complex simulations and models that predict weather patterns and events. The speed and computational power of these systems allow forecasts to be made quickly and accurately, providing valuable information for planning and decision-making in various sectors, including agriculture, energy, and disaster management.

Embarking on your HPC journey

To embark on an HPC journey, begin by assessing your current infrastructure and clearly defining your objectives, requirements, constraints, and expected outcomes for the HPC initiative.

This involves understanding the specific computational needs of the organisation and the types of workloads that will be run on the system. Once the goals and requirements are established, the next step is to select the right cloud provider and tooling that aligns with the organisation's high-performance computing needs. Look for scalable resources and specialised services tailored for HPC workloads.

It's crucial to involve executive leadership in the decision-making process to ensure alignment with the organisation's strategic direction and to secure the necessary investment. Additionally, organisations should consider best practices for running workloads, such as not overloading the system with too many jobs, using disk space efficiently, and optimising code for performance.

Explore all HPC solutions

OVHcloud offers high-performance computing (HPC) solutions that provide fast and efficient computing capabilities without the need for upfront costs. Our global network includes 46 datacenters and 44 redundant PoPs in 140 countries, ensuring low latency and quick delivery of HPC capacity when needed, for uses such as machine learning and advanced data processing.

The range includes innovative hardware maintained by a dedicated server innovations team, and specialised cloud GPU servers for intensive use such as GPU for AI.

OVHcloud's solutions are designed to cater to a variety of needs, such as video on demand, live streaming, interactive gaming, running novel simulations, re-evaluating financial models, or analysing large-scale datasets. OVHcloud offers the flexibility to use HPC exactly when you need it, without having to own the infrastructure.
